My research focuses on articulatory modelling for speech production, 3D anatomical modelling, medical imaging, 3D reconstruction of the musculoskeletal system, subject-specific modelling, signal and image processing, computer graphics and speech.

This research is highly multidisciplinary, involving collaborations with engineers, signal and image processing scientists, medical doctors, computer scientists, acousticians, paleoanthropologists, speech therapists, etc.

Models of speech production

Keywords:

- Articulatory modelling

- Speech disorders

- Dysarthria

- Medical imaging, MRI

- Speech production

- Acoustics

- Inter-speaker variability

- Multi-speaker modelling

Virtual reality simulator and assistant for regional anaesthesia

Development of a virtual reality simulator and assistant for regional anaesthesia, carried out within the European project RASimAs.

Related keywords:

- 3D anatomical modelling

- Patient-specific models

- Medical imaging

- Image registration

- Virtual reality

3D growth anatomical modelling of the fetus for dosimetry study

The goal of this project is to build a 3D articulated model of the fetus from ~10 to ~40 weeks for industrial, teaching and medical research purposes. The project is conducted in collaboration with other academic institutions, clinicians and industrial partners.

This work was part of the FETUS project, part of the FEMONUM projects.

Do not hesitate to visit the publications page for further details or to send me an email.

Related keywords:

- 3D anatomical modelling

- Growth fetus modelling

- Articulated fetus

- Skeleton driven deformation

- Biomedical Images

- Mesh deformation

3D patient specific bone reconstruction from biplanar radiography

In a highly multidisciplinary collaboration between engineers, clinicians, physicists and the company founded by Nobel Prize winner Georges Charpak to develop his results in high-energy physics, the Georges Charpak Human Biomechanics Institute has taken part in the development of the ultra-low-dose 2D/3D X-ray imager EOS. This innovative technique allows the recording of two stereo full-body X-rays, front and side, as shown in the following figure.

My research project combined my own experience in 3D reconstruction and modelling of the human body with the strong expertise in 3D reconstruction of the skeleton from EOS images developed at LBM. It consisted of developing a process for reconstructing the bony structures of the lower limb in 3D and in volume from EOS images.

My work was part of the project SterEOS+ funded by the competitiveness cluster Medicen.

Keywords related to my research:

- 3D volume reconstruction

- 3D reconstruction of lower limbs

- Bone reconstruction

- Cortical and spongy bone

- Biplanar stereo radiography

- Biomedical images

- Bone modelling

- Volume modelling

Modelling of the vocal tract for speech evolution

After the famous study published by Lieberman & Crelin in 1971, which claimed that Neanderthals could not have had a speech repertoire as developed as that of modern humans because of vocal tract shape limitations (in particular the high larynx position estimated by the authors), a number of contradictory studies have been published. From the 1980s until now, the debate has been regularly renewed by new approaches or discoveries: the discovery of a hyoid bone, a new reconstruction of a Neanderthal fossil, new articulatory modelling, or genetic arguments.

This study, resulting from a close collaboration between paleoanthropologists and speech engineers, aimed to bring new evidence to this debate by means of modelling contributions. More particularly, we investigated the hypothesis that speech movements may have evolved from feeding movements, both in phylogeny and ontogeny. The speech and feeding models we built and the cross-comparison we carried out support this hypothesis.

This work was part of the European project HandToMouth.

Do not hesitate to visit the publications page for further details.

3D articulatory-acoustic modelling of the vocal tract

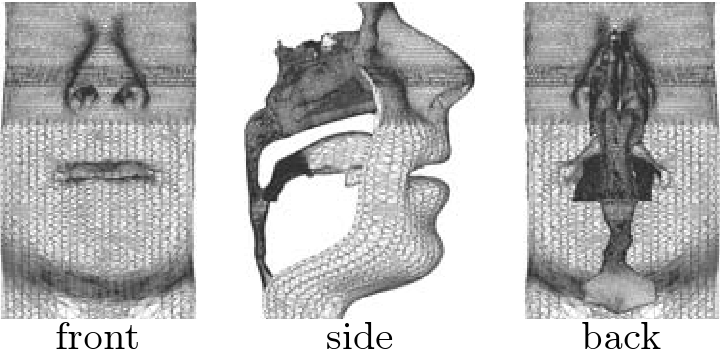

In the general framework of virtual talking head development, this work is part of the study of nasality in speech production. Nasal phonemes, present in around 99% of the world's languages, are produced by lowering the velum, which allows airflow and sound waves to exit through the nasal tract. This simple gesture has complex acoustic effects.

In this context, the objectives of my work were:

1. to build a 3D data-based articulatory model of the nasal tract

2. to characterize acoustically the movements of the velum

3D articulatory-acoustic model of the nasal tract

The model characteristics were:

- Functional: In contrast to geometrical or biomechanical models, functional models aim to let the movements and shapes of the articulators emerge from data. This approach has the drawback of depending on the recording task, but the advantage of avoiding the introduction of a priori movements and shapes into the model.

- Three-dimensional: In order to fully describe and understand the nasal tract and its gestures, the model was developed in 3D.

- Organ-based: The organs, or articulators, are considered to be the unit elements of the vocal tract. For accurate modelling, the organs, as opposed to the tract, are thus modelled independently (more precisely, their shapes are).

- Single subject: As a first attempt to develop such a model, only one subject was considered in the study.
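To illustrate what letting shapes "emerge from data" can mean in practice, here is a minimal sketch. It assumes that each recorded articulator shape is flattened into a list of coordinates and that the dominant mode of variation is extracted by a principal component analysis, computed here by power iteration. The analysis technique, the function names (`first_component`, `synthesise`) and the toy data are illustrative assumptions; this page does not say which method was actually used for the model.

```python
import math

def mean_shape(shapes):
    """Average each coordinate over all recorded shapes."""
    n = len(shapes)
    return [sum(s[i] for s in shapes) / n for i in range(len(shapes[0]))]

def first_component(shapes, iterations=100):
    """Return (mean shape, unit vector of the dominant shape variation).

    Power iteration on the covariance of the centred shapes, i.e. the
    first principal component. Assumes the start vector is not
    orthogonal to that component.
    """
    mean = mean_shape(shapes)
    centred = [[x - m for x, m in zip(s, mean)] for s in shapes]
    dim = len(mean)
    v = [1.0 / math.sqrt(dim)] * dim
    for _ in range(iterations):
        # Apply X^T X to v without forming the covariance matrix.
        scores = [sum(c * w for c, w in zip(row, v)) for row in centred]
        v_new = [sum(sc * row[i] for sc, row in zip(scores, centred))
                 for i in range(dim)]
        norm = math.sqrt(sum(x * x for x in v_new))
        v = [x / norm for x in v_new]
    return mean, v

def synthesise(mean, component, value):
    """Shape predicted by the linear model for one parameter value."""
    return [m + value * c for m, c in zip(mean, component)]

# Toy data (hypothetical): a 3-point contour (x0, y0, x1, y1, x2, y2)
# whose last two points move vertically together across 5 "recordings".
base = [0.0, 0.0, 1.0, 0.0, 2.0, 0.0]
direction = [0.0, 0.0, 0.0, 0.6, 0.0, 0.8]
recordings = [[b + t * d for b, d in zip(base, direction)]
              for t in (-2, -1, 0, 1, 2)]

mean, component = first_component(recordings)
print(synthesise(mean, component, 1.5))
```

In such a data-driven model the control parameter is a score along a component learned from the recordings, which is why the result depends on the recording task but carries no a priori assumption about the shapes.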

The nasal tract can be divided into two regions:

1. the posterior region, from the connection point with the oral tract to the separation point of the tract into two choanae, is composed of the velopharyngeal port and the cavum and is surrounded by deformable tissues, that is, the velum and the pharyngeal walls.

2. the anterior region, from the separation point of the tract into two choanae to the nostril outlet, is composed of the two undeformable nasal passages. Various paranasal sinuses are also connected to these passages.

The anterior region,

The posterior region,

Two videos, one for each of the two articulatory parameters of the velopharyngeal port (VL and VS), are shown below. The left figures show a 3/4 anterior view of the velum, the lower figure showing only one half (3/4 posterior view for the upper-left figure of VS); the upper-right figure shows a midsagittal view (velum in blue, posterior pharyngeal wall in red), and the two lower-right figures display transverse views in the planes indicated by the two black lines in the upper-right figure.

Video 1 (*.avi) = VL parameter

Video 2 (*.avi) = VS parameter

In order to model realistic movements, dynamic data (EMA recordings) were used to control the articulatory model of the velopharyngeal port. Here is a video of the synthesized velum movement corresponding to real velum movements during the sequence [pɛ̃pɛ̃pɛ̃bɛ̃pɛ̃mɛ̃] (as in "pinpinpinbinpinmin" in French). The right figure shows a 3/4 anterior view of the velum; the left figure displays, in the midsagittal plane, the trajectory of the red point visible in the right figure, the background point cloud representing all the positions taken by this point during the recording of the dynamic data. Note that the sound is the original recording, synchronized with the synthesized movement.

Video 3 (*.avi) = Synthesized velum movement

Acoustic characterization of the velum movements

These two parameters have been characterized acoustically at low frequencies using an electrical analogue plane-wave acoustic propagation model. It has been shown that the acoustic effect of VL can be ascribed to the joint variation of the geometries of the oral and nasal tracts (more precisely, within the nasal tract, of the velopharyngeal port geometry), while the acoustic effect of VS can be ascribed mainly to the variation of the geometry of the nasal tract alone (again, of the velopharyngeal port).

Moreover, in the context of my position as assistant lecturer at ICP in 2007, the 3D nasal tract model (3D mesh of the velopharyngeal port and of the nasal passages) has been integrated into a complete 3D mesh of the vocal tract (see figure below). A comparative study between 2D and 3D acoustic propagation in the case of nasality has been started in collaboration with Hiroki Matsuzaki and Kunitoshi Motoki from Hokkai-Gakuen University (Japan).

Finally, again in the context of my position as assistant lecturer, the geometry and the acoustical effects of the sinus maxillaris and piriformis have been assessed.
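As an illustration of the plane-wave, electrical-analogue approach mentioned in this section, the sketch below treats a tract as a chain of lossless cylindrical sections, each described by a transmission-line (chain) matrix, and looks for the resonances of the resulting tube. It is a simplified textbook formulation under stated assumptions: no losses, an ideal open end, no nasal branch or sinuses, and assumed values for the speed of sound and air density. It is not the model used in this work, and the function names are hypothetical.

```python
import math

SOUND_SPEED = 350.0  # m/s, assumed value for warm, humid air
AIR_DENSITY = 1.14   # kg/m^3, assumed value

def section_matrix(length, area, freq):
    """Chain matrix of one lossless cylindrical section (plane waves)."""
    k = 2.0 * math.pi * freq / SOUND_SPEED
    z = AIR_DENSITY * SOUND_SPEED / area  # characteristic impedance
    c, s = math.cos(k * length), math.sin(k * length)
    return ((c, 1j * z * s), (1j * s / z, c))

def multiply(m1, m2):
    """Product of two 2x2 matrices stored as nested tuples."""
    return tuple(
        tuple(sum(m1[i][n] * m2[n][j] for n in range(2)) for j in range(2))
        for i in range(2)
    )

def transfer_gain(sections, freq):
    """|U_out / U_in| for a tube driven at a closed end, ideally open at the other.

    `sections` is a list of (length in m, area in m^2), input to output.
    With zero pressure at the open end, U_in = D * U_out, so the gain is 1/|D|.
    """
    total = ((1.0, 0.0), (0.0, 1.0))
    for length, area in sections:
        total = multiply(total, section_matrix(length, area, freq))
    return 1.0 / max(abs(total[1][1]), 1e-12)

def resonances(sections, f_max=3000.0, step=1.0):
    """Frequencies (Hz) where the gain has a local maximum on a regular grid."""
    freqs = [step * i for i in range(1, int(f_max / step))]
    gains = [transfer_gain(sections, f) for f in freqs]
    return [freqs[i] for i in range(1, len(freqs) - 1)
            if gains[i - 1] < gains[i] >= gains[i + 1]]

# A uniform 17.5 cm tube split into five identical sections.
uniform_tube = [(0.035, 3.0e-4)] * 5
print(resonances(uniform_tube))
```

For the uniform tube, the resonances fall at odd multiples of c/(4L), that is, close to 500, 1500 and 2500 Hz with the values assumed here. Changing the area of individual sections shifts these resonances, which is the kind of geometry-to-acoustics relation that such models are used to study.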

For more details...

For more details about this work:

- Find here abstracts and keywords of my PhD in English and in French

- Download here my PhD thesis manuscript (in color pdf) [Warning: French only, 24.2 MB]

- Download here my PhD presentation (in color pdf) in English [Warning: 6 MB]

- Download here my PhD presentation (in color pdf) in French [Warning: 6 MB]

- See my publications page

In addition to my personal publications, here are some publications related to the virtual talking heads (into which this research fits) developed at the Department of Speech and Cognition (ex-ICP) of GIPSA, with a description and an example of application:

Pierre Badin, Gérard Bailly, Frédéric Elisei, and Matthias Odisio. Virtual Talking Heads and audiovisual articulatory synthesis. In D. Recasens, M.-J. Solé and J. Romero, editors, Proceedings of the 15th International Congress of Phonetic Sciences, volume 1, pages 193-197, Barcelona, Spain, 2003.

Yuliya Tarabalka, Pierre Badin, Frédéric Elisei, and Gérard Bailly. Can you "read tongue movements"? Evaluation of the contribution of tongue display to speech understanding. In Actes de la 1ère Conférence internationale sur l'accessibilité et les systèmes de suppléance aux personnes en situation de handicaps (ASSISTH'2007), Toulouse, France, 2007.